ACM.80 Protecting data and encryption keys in memory and in use

This is a continuation of my series of posts on Automating Cybersecurity Metrics.

Enclaves, Trusted Execution Environments, and Trusted Platform Modules

"I can put whatever I want in that box and you won't even know."

One of the product managers at a company I used to work for told me that the former head of engineering said something to that effect to him when he was asking for changes to a security product. I wasn’t in the room when this statement was apparently uttered, so I cannot speak to the veracity of the matter.

However, it does make you think about the security of software within a system with custom hardware, many software components, and complex embedded systems programming. How do you know that someone hasn’t inserted some rogue code or hardware changes into the operating system or one of the components included on the system? In fact, hardware vulnerability exploitation is on the rise. Who is checking or even understands hardware vulnerabilities that may exist in systems on which you are running your code?

63% of organizations face security breaches due to hardware vulnerabilities

That particular person was not happy that I was hired to help them connect their products to the cloud. In my interview he said to me, “Don’t screw it up.” Very comforting when you’re about to come in and work with a person on a new project, right?

Well, that person left the company before I came on board. Ironically, even though he was not happy about moving that particular product to the cloud, according to the people who hired me, he went to work for a cloud provider after he left. He even told me in my interview that a different cloud provider was better than the one he went to work for, so I was surprised by that move.

I don’t really know that person and maybe his words were misconstrued. I mainly mention that comment to get you thinking about what someone could put into a software or even a hardware system when people aren’t really paying attention to all the details or don’t even understand the implication of certain implementations within a system architecture. The product manager in question certainly wasn’t going to be doing a code or hardware review.

Now that person, who allegedly indicated that he could “put anything in the box” and the product manager wouldn’t even know — is working for a major cloud provider, and likely on the hardware on which your systems operate. Hopefully he was just talking and he wouldn’t actually follow through on that threat, or perhaps it was a misunderstanding.

But let’s say there was someone at a cloud provider who had malicious intentions. Hopefully AWS has sufficient processes in place to prevent infiltration of rogue code and hardware vulnerabilities. Based on many presentations I’ve seen at AWS re:Invent, I think they do a lot of code and architecture reviews to prevent security problems. But what if they miss something? What can you do about that?

What if someone slips some code into the operating system that allows that person to remotely trigger actions on an Internet-connected VM? When I started at the security vendor I thought: what if someone tried to insert code that accessed the AWS credentials on the device, which were used to communicate with AWS and run commands in our account? Also, the appliance product was tested in China, something I recommended against. What if someone in China found a vulnerability, which now has to be reported to the Chinese government before telling anyone else according to a new Chinese law?

China's New Data Security Law Will Provide It Early Notice Of Exploitable Zero Days

Or what if malware gets onto your VM and can then access the data on it? Maybe it’s not a rogue user but some vulnerability that allows malware to cause a system crash that dumps memory, which is how many attackers get access to sensitive data. Or perhaps they can inject fileless malware into a process in memory that can then access anything in memory related to that process.

How can we defend against such potential threats?

Maybe a secure enclave can help. That, of course, depends on the implementation details of the enclave.

Architecting a Secure IoT Solution that leverages encryption keys

I was tasked at a security vendor with architecting a solution to allow firewalls to connect to a cloud provider. The firewall configurations for many locations could be managed by customers from the cloud and their logs would be stored in the cloud. The team I was managing had only previously built products with management interfaces that exist only in private, on-premises networks.

I was concerned about a lack of knowledge of attacks on public-facing web systems. As it turned out, this concern was well founded when I learned that the developers were using the session ID as the name of files downloaded from the system. The team later set up a CORS policy with a (*) to overcome issues accessing micro-services from a reverse proxy. The team had a few things to learn but they were very capable. It wasn’t an issue with their abilities, just that I had to explain a few things to them along the way that they had not dealt with before.

Due to my concerns about a distributed, Internet-facing system for IoT sensor data and communications, I opted to use AWS IoT for device connections. By leveraging AWS IoT, developers who worked at AWS would architect one side of the solution. They should have experience with building a secure service in the cloud, more than my team, which had never done such a thing before.

Although my team was very smart and some were more experienced than me in certain aspects of their product — it was just riskier to have them build this out because the Internet component and working in a shared virtual environment was new to them and the company was in a hurry to make the move.

I had limited time to explain all the details I’ve been writing about in this blog series. As you can see, I’ve published posts for 80 days now and I’m nowhere near close to deploying what I set out to deploy because I’m taking the time to explain all the security implications, threat model, attack vectors, security concepts and gotchas along the way. How could I find the time to explain all of this to my team and get the project done in the timeframe the executives wanted? Leveraging AWS IoT helped reduce the time it would take to deliver the solution and transferred some of the security risk to AWS.

As it turns out, my team had to rewrite some of the embedded systems client software. That is an area where some of them had years of experience. According to experienced architects on the team, the AWS IoT client was more like a proof of concept than a piece of usable working code that could be put into production. I’m sure it’s better now, but make sure you have security-conscious, experienced embedded systems programmers if you’re working on an IoT solution.

Protecting Private Keys with Hardware

With AWS IoT, devices can authenticate to AWS with a certificate.

I explain asymmetric and symmetric encryption at a high level in the book at the bottom of my post, but essentially for this implementation we used asymmetric encryption with a public/private key pair to authenticate the device to AWS and exchange a shared key for symmetric encryption and credentials used for data exchange after that initial key exchange.

The thing about the private key used for the initial key exchange was that if anyone else could obtain the private key that belonged to that device, they could impersonate that device. They would then be able to connect to the customer’s cloud account, view customer data, and change firewall configurations. They might even be able to somehow obtain access to our vendor AWS account by performing rogue actions with those credentials. It was imperative to keep that key private and secure.

What if someone at the security vendor inserted code into the embedded systems on the device to access the private key? What if malware got onto the firewall and executed commands to obtain the key from disk or in memory? What if an attacker obtained a developer’s credentials and wrote code to steal the private key from the device? What if a valid process on the system got infiltrated with rogue code that captured the key and unencrypted data from memory? How would we even know?

This is why I wanted to use a special hardware component integrated into the hardware called a trusted platform module (TPM). That component was integrated into the device to protect the private key and the symmetric key exchanged with cloud systems. Microsoft Windows uses a TPM to protect secrets when one exists on the hardware running the Windows operating system.

How Windows uses the TPM - Windows security

Essentially the keys would be stored in hardware and processed by a separate physical component. The other code on the device would not have any access to the hardware component (memory or storage) that handled the encryption keys and the encrypting and decrypting of the asymmetric key exchanged with AWS. The data would enter the component encrypted, be decrypted within the component and processed, and then re-encrypted before it left the component. APIs existed to integrate with this hardware component which allowed programmers to interact with it.
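To make the idea of "the key never leaves the component" a little more concrete, here is a minimal sketch in Python. It is not a TPM API and the function name `make_key_handle` is hypothetical. It only illustrates the shape of the interface: callers get operations that use the key, never the key itself. Python cannot enforce real isolation (a closure can be inspected), whereas a TPM enforces it in hardware.

```python
import hashlib
import hmac
import secrets


def make_key_handle():
    """Generate a secret key and return only operations that use it.

    Illustration only: the key lives in a closure and is never returned,
    which mimics the interface of a hardware key store, not its security.
    """
    key = secrets.token_bytes(32)  # created "inside" and never handed out

    def sign(message: bytes) -> bytes:
        # Compute an HMAC-SHA256 tag over the message with the hidden key.
        return hmac.new(key, message, hashlib.sha256).digest()

    def verify(message: bytes, tag: bytes) -> bool:
        # Constant-time comparison of the expected tag and the supplied tag.
        return hmac.compare_digest(sign(message), tag)

    return sign, verify
```

A caller would do something like `sign, verify = make_key_handle()` and then only ever pass messages in and get tags or booleans back, which is roughly how the rest of the device software would interact with a hardware component through its API.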

A TPM existed on those firewalls but at the time I worked at the company they were not in use. There was a lot of discussion about employing the component but up to that point the head of engineering that subsequently left the company had not prioritized its use. I no longer work for the company so I don’t know if the devices use TPMs or not. As with anything you may have to pay more to get additional security for devices that have and use that functionality.

How is a TPM related to an Enclave?

This concept of having a separate hardware or sometimes software component to segregate management of encryption, decryption, and processing of highly sensitive data you don’t want in shared memory on a system is the reason enclaves exist. There are different names for these types of components.

Azure was actually the first cloud provider to introduce this concept with their trusted execution environment (TEE) which is part of Azure confidential computing.

Azure Confidential Computing - Protect Data In Use | Microsoft Azure

From the Microsoft website:

Confidential computing is an industry term defined by the Confidential Computing Consortium (CCC) - a foundation dedicated to defining and accelerating the adoption of confidential computing. The CCC defines confidential computing as: The protection of data in use by performing computations in a hardware-based Trusted Execution Environment (TEE).

GCP uses virtual trusted platform modules in their shielded VMs (virtual meaning software, not hardware):

Virtual Trusted Platform Module for Shielded VMs: security in plaintext | Google Cloud Blog

AWS has introduced similar functionality with Nitro Enclaves. Are enclaves hardware or software? It sounds like a combination of the two but it’s not exactly clear.

Nitro moves some VM capabilities into hardware. If you want technical details explaining how AWS Nitro works, this is one of my favorite videos on the topic:

AWS offers a Nitro TPM as well. More on AWS Nitro Enclaves below.

The main point of these components is to ensure no other malware or code on the system can access your encryption keys and very sensitive data by keeping it on completely separate hardware (typically). Software implementations of these types of components also exist but generally hardware is considered to be more secure and harder to tamper with.

That said, more and more hardware vulnerabilities are coming into existence and becoming more of a concern for organizations that process highly sensitive data. So you can’t assume just because you are using hardware it’s secure. Companies like the major cloud providers need to be diligent in implementation and testing of hardware components.

37 hardware and firmware vulnerabilities: A guide to the threats

By using an isolated component to process sensitive data, if properly implemented, even a rogue programmer or malware that gets access to system memory on an EC2 instance should not be able to get your encryption keys or data. It never exists anywhere that the main EC2 system OS can access it. At least that’s how it should work. I haven’t tested out AWS Nitro Enclaves yet.

Ideally, if you are creating a private key, it is generated on the device that it identifies (or that identifies the human who uses that device, such as a cell phone) and is never accessible to a human. An AWS Nitro Enclave does that.

Here’s when an enclave, TEE, or TPM won’t help you: I once reviewed an identity system where seeding the system with user identities involved emailing those initial values to the vendor, who then uploaded them to the system. For banking customers. That is not a secure approach and I was told it would be fixed. Identities, keys, and certificates can be generated inside the enclave rather than having someone create them externally and then add them in.

Supply chain assessments in relation to hardware and enclaves

The question I had about the hardware for the device at the security vendor was how the initial private key that got stored in the TPM would be generated. If the device was manufactured in China, would employees at that company ever have access to those private keys? Could they obtain a public key and swap it out with their own public key for a device-in-the-middle attack of some kind? This is the level of detail you need to go into if you are worried about supply chain issues in the hardware you purchase. Ask your vendor how they handle these details.

Enclaves and network security

I’ve seen a misunderstanding of the point of enclaves. Although they do block network access to the data, you can do that at other layers in your architecture for lower cost and complexity and before an attack ever gets all the way to your enclave. That would be a far safer choice. You also probably don’t want to wait until you are processing the data in an enclave to block rogue network traffic.

But in case something slips through, an enclave will help by preventing data exfiltration if rogue code should somehow get into the enclave. That also should not happen if you properly develop, test, and sign your code. But as we know, things do happen.

An enclave can’t help you view network traffic to spot an attack that is otherwise invisible once attackers have control of your operating system via a root kit, hardware, or kernel level exploit. I like to say that once your machine is compromised at that level, it can lie to you, but the network doesn’t lie. An attacker with low-level access can change the output of your operating system, tools, and logs. They cannot change network logs captured on separate devices or in-line traffic inspection devices (unless those are also compromised.)

An attack is only useful to an attacker if they can communicate with the system remotely to carry out additional commands or exfiltrate data, and that activity, though it can be hidden deep in network packet headers, must be visible in those packets. If it wasn’t, the data would not be able to get to its intended destination or do what the attacker intends for it to do. Your network traffic can reveal things your system cannot in some cases, possibly unless you dump and query the memory. And the whole point of an enclave is that you can’t dump the memory.

One of the primary benefits of an enclave — protect data in memory

The point of an isolated environment is not simply to block network access (though it can do that). It is actually to segregate out the data and keys from attacks that can access sensitive data in memory (also referred to as in use). Those types of attacks are difficult to defend against once rogue code has accessed a system.

You may still be able to protect data on disk with an encryption strategy that protects an encryption key and never stores unencrypted data on disk. However, protecting data in memory is significantly more challenging.

Encrypted data is not just useless to attackers; it’s useless to you and your application in an encrypted state. In order to become useful, at some point you have to decrypt it. You want to protect it in its unencrypted state while being processed in memory, but how can you do that?

You have to ensure that no malware can ever infect that system or find a way to crash the system and dump out memory. You have to ensure that there’s no software running on the system that can access the memory inappropriately using commands such as the one I demonstrated and described in this post:

When What You Deleted is Not Really Deleted

How can you do that? What if you put your secrets and sensitive unencrypted data in an enclave not accessible to commands such as the one I just mentioned? There is no command line on which to spit out data. Code in the enclave can be signed and no other rogue code or commands can run except for the software that is supposed to be running in that environment. That’s how we try to protect sensitive data in memory.

Enclaves in the context of the security architecture we are creating

In the last few posts we considered exposure of an SSH key created by a process to assign to a user. We covered a number of security risks and I explained that the safest approach to creating the key might be to do so in an AWS Nitro Enclave.

Automated Creation of an SSH Key for an AWS User

What exactly is an AWS Nitro Enclave?

From the AWS documentation:

An enclave is a virtual machine with its own kernel, memory, and CPUs. It is created by partitioning memory and vCPUs from a Nitro-based parent instance. An enclave has no external network connectivity, and no persistent storage. The enclave’s isolated vCPUs and memory can’t be accessed by the processes, applications, kernel, or users of the parent instance.

So an enclave is a special type of virtual machine that has an isolated processing environment which nothing else can get into:

- No network access so the data can’t be exfiltrated by the software running in the enclave.

- No persistent storage so we don’t have to worry about our key residing on disk after creation.

- CPUs and memory are segregated in such a way nothing else can access the data.

A virtual machine sounds like software, but if you check out the AWS Nitro documentation it references a Nitro TPM:

Does an AWS Enclave use a TPM? It doesn’t really say.

This video from an event in DC earlier this year (2022) talks about TPMs but it doesn’t say if AWS Enclaves use one or not. However, if you need a TPM for an application that protects your encryption keys in a hardware-based solution, you now have access to one. As mentioned above, AWS Nitro also pushes more of the VM architecture into hardware.

Ok so an Enclave is very isolated. But if we have no network access, then how can we get our new SSH key into SSM Parameter store or Secrets Manager? A Vsock proxy exists that allows the enclave to communicate with the parent host. That “secure local channel” as AWS describes it, is obviously a critical aspect of security for a Nitro enclave.
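As a rough sketch of what talking over that local channel could look like, the Python below frames messages with a length prefix and opens a vsock stream socket. `socket.AF_VSOCK` is part of the standard library on Linux. The parent CID of 3 is what I understand the Nitro documentation to specify, and the port number is purely hypothetical, so treat both as assumptions. This is not the AWS vsock proxy itself, just the kind of code that might send an already-encrypted blob out of an enclave.

```python
import socket
import struct

PARENT_CID = 3     # assumption: CID of the parent instance per the Nitro docs
PROXY_PORT = 8000  # hypothetical port for a listener on the parent instance


def send_msg(sock: socket.socket, payload: bytes) -> None:
    """Send one message prefixed with its 4-byte big-endian length."""
    sock.sendall(struct.pack(">I", len(payload)) + payload)


def _recv_exact(sock: socket.socket, count: int) -> bytes:
    """Read exactly `count` bytes or raise if the peer closes early."""
    buf = bytearray()
    while len(buf) < count:
        chunk = sock.recv(count - len(buf))
        if not chunk:
            raise ConnectionError("peer closed before the full message arrived")
        buf.extend(chunk)
    return bytes(buf)


def recv_msg(sock: socket.socket) -> bytes:
    """Receive one length-prefixed message."""
    (length,) = struct.unpack(">I", _recv_exact(sock, 4))
    return _recv_exact(sock, length)


def connect_to_parent(cid: int = PARENT_CID, port: int = PROXY_PORT) -> socket.socket:
    """Open a vsock stream from inside the enclave (Linux only)."""
    sock = socket.socket(socket.AF_VSOCK, socket.SOCK_STREAM)
    sock.connect((cid, port))
    return sock
```

Only encrypted data should cross this channel, since the parent instance is exactly the environment we are trying to keep the plaintext key away from.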

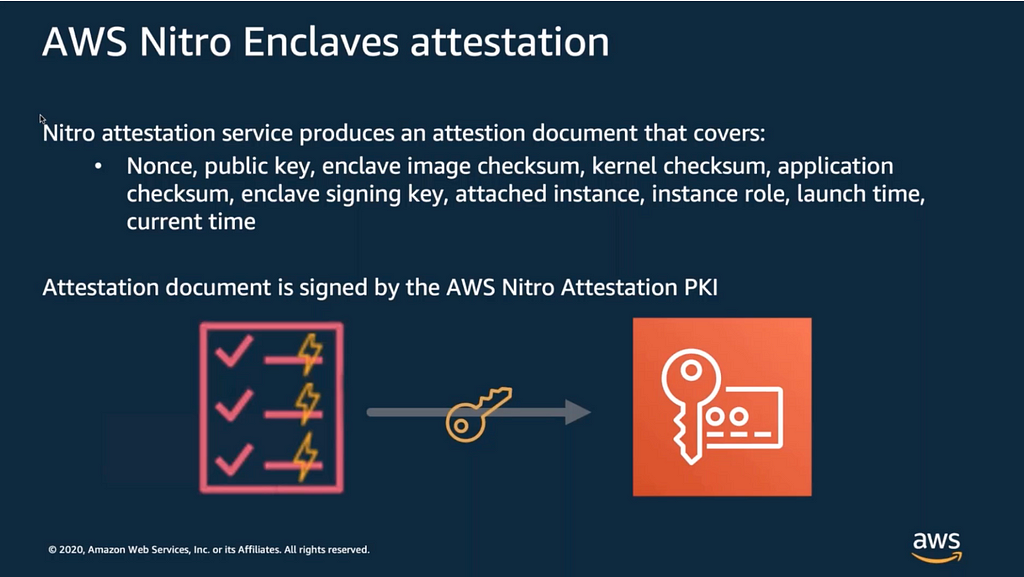

We can also leverage cryptographic attestations for Nitro enclaves when working with third-parties. The attestation document can be verified before initiating processes in the enclave. We aren’t working with a third-party but can review that process to see if it benefits us in any way.

How an enclave helps with encrypted data and secrets

The purpose of an enclave is to protect sensitive data in memory and in use on the host, and also to keep it out of persistent storage that may potentially be accessible to malware or unauthorized users. Enclaves and TPMs also protect the private keys that identify the enclave. The private key is generated in the enclave and never leaves the enclave. That private key can be used to decrypt data encrypted with the associated public key.

Encryption keys will be in memory during the encryption and decryption process. In addition, if you are processing sensitive data, at some point that data must be decrypted in order to be processed. Malware could try to snatch that data out of memory at the point of decryption in memory as explained in the last post.

Decrypting and processing the data in an enclave significantly reduces the chances that malware can get at the data or the encryption key. AWS Nitro Enclaves have a proxy to KMS. You can put encrypted data in the enclave, decrypt it, process it, and then re-encrypt it all in the enclave. The KMS encryption key and the data you need to protect are not exposed in such a way that another process can capture them in memory. The data is never stored to a persistent disk the way we did with our SSH key when we created it.

A revised architecture for creation of SSH keys for users

Our problem with the SSH key is solved if we can create a KMS key that developers can use to decrypt data, but not our IAM administrators. Then, we can grant each user access to only his or her secret in secrets manager to obtain an SSH key.

Will we hit a limit on AWS Secrets Manager if we have a lot of users? For most organizations, no. The limit per region for secrets is 500,000, and even large organizations likely do not have all their users in a single AWS account.

AWS Secrets Manager endpoints and quotas

I already covered cost comparison between Secrets Manager and AWS SSM Parameter Store in a prior post. This seems like our best option.

Implementing our AWS Nitro Enclave Solution

We can follow this tutorial to create our enclave:

Getting started: Hello enclave

The first thing to note is that when we start an EC2 instance on which we want to run an enclave, we need to enable enclaves first:

aws ec2 run-instances --image-id ami_id --count 1 --instance-type supported_instance_type --key-name your_key_pair --enclave-options 'Enabled=true'

We can also enable the enclave using CloudFormation:

AWS::EC2::Instance EnclaveOptions
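For reference, the CloudFormation equivalent of the `--enclave-options` flag might look like the template generated below. This is a minimal sketch: the logical ID `EnclaveParentInstance` and the function name are hypothetical, and the AMI, instance type, and key name are the same placeholders used in the CLI command above.

```python
import json


def enclave_instance_template(ami_id: str, instance_type: str, key_name: str) -> str:
    """Return a CloudFormation template (JSON) for an enclave-enabled instance."""
    template = {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Resources": {
            "EnclaveParentInstance": {  # hypothetical logical ID
                "Type": "AWS::EC2::Instance",
                "Properties": {
                    "ImageId": ami_id,
                    "InstanceType": instance_type,
                    "KeyName": key_name,
                    # Same effect as --enclave-options 'Enabled=true'
                    "EnclaveOptions": {"Enabled": True},
                },
            }
        },
    }
    return json.dumps(template, indent=2)
```

Calling `enclave_instance_template("ami_id", "supported_instance_type", "your_key_pair")` returns a JSON string that could be saved to a file and deployed like any other template. It only enables enclave support on the parent instance.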

However, it looks like we have to use the AWS Nitro Enclave CLI rather than AWS CloudFormation to deploy the enclave.

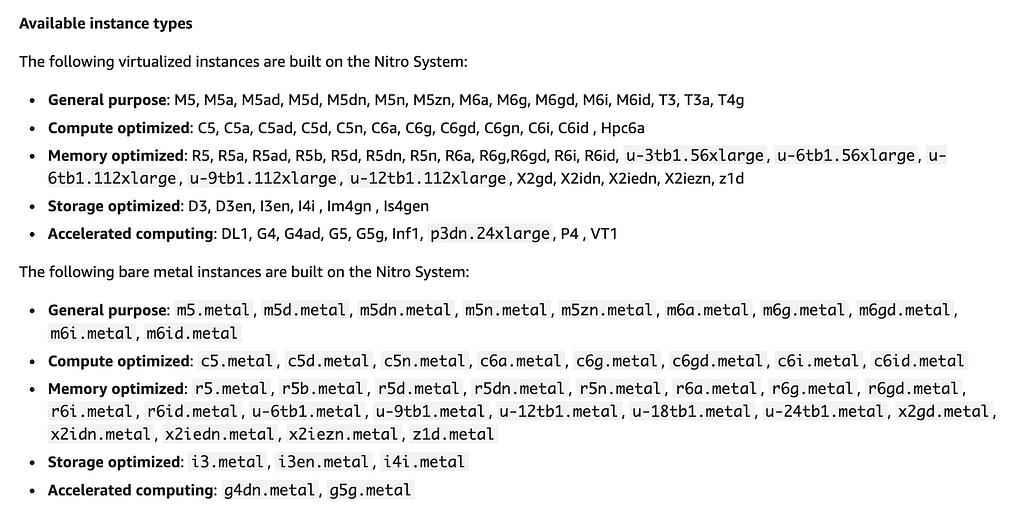

The instance needs to be a Nitro-based instance. From the documentation at the time of this writing these are Nitro-based instances:

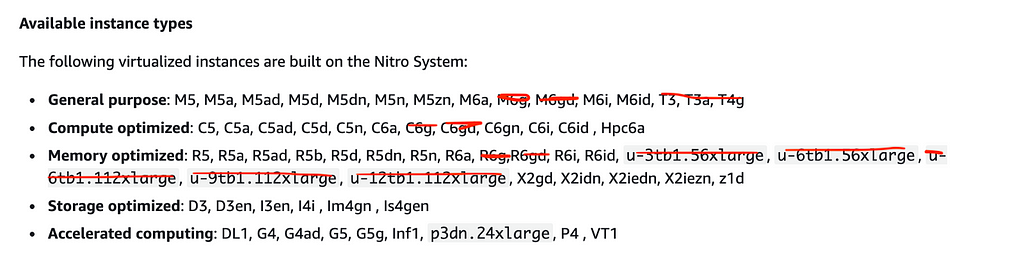

We cannot use one of these types, per the requirements:

except t3, t3a, t4g, a1, c6g, c6gd, m6g, m6gd, r6g, r6gd, and u-*.

So it looks like these are our options:

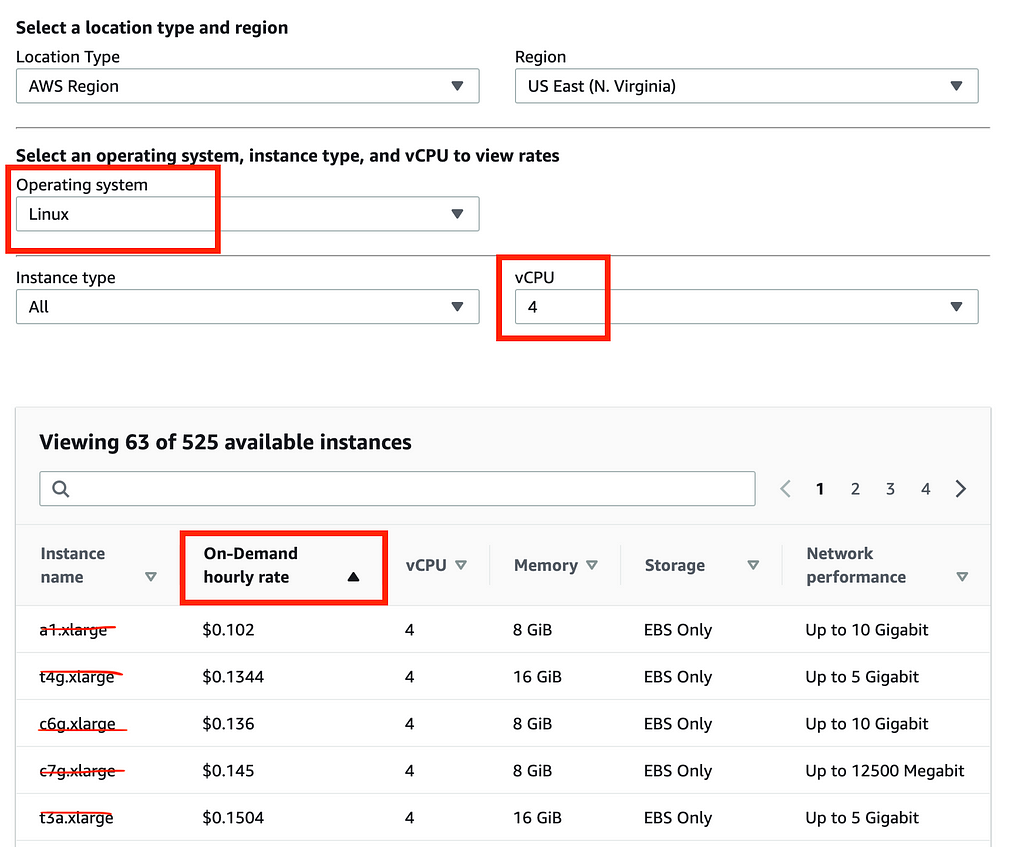

We need at least 4 vCPUs. We can review the list of EC2 instances to find a type that meets our needs:

Amazon EC2 Instance Types - Amazon Web Services

Now I can cross-reference the list above with on-demand EC2 prices to get the lowest cost option. I can filter the list on Linux instances with 4 vCPUs. I sort by On-Demand hourly rate ascending, lowest to highest. I start going down the list till I find an available instance type.
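The manual filtering and sorting I just described could be sketched in Python as follows. The excluded families come from the requirements quoted above. The catalog format (dicts with `type`, `vcpus`, and `hourly_usd` keys) is a hypothetical structure you would have to fill in from the pricing page yourself, and the code assumes every entry in the catalog is already a Nitro-based type.

```python
EXCLUDED_FAMILIES = {
    "t3", "t3a", "t4g", "a1", "c6g", "c6gd", "m6g", "m6gd", "r6g", "r6gd",
}
MIN_VCPUS = 4


def family_of(instance_type: str) -> str:
    """Return the family part of a type name, e.g. 'c5a' for 'c5a.xlarge'."""
    return instance_type.split(".", 1)[0]


def is_excluded(instance_type: str) -> bool:
    """True if the type belongs to a family the requirements rule out."""
    family = family_of(instance_type)
    return family in EXCLUDED_FAMILIES or family.startswith("u-")


def cheapest_first(catalog):
    """Filter a catalog of Nitro-based types and sort by price, lowest first.

    `catalog` is an iterable of dicts with the hypothetical keys
    'type', 'vcpus', and 'hourly_usd'.
    """
    eligible = [
        entry for entry in catalog
        if entry["vcpus"] >= MIN_VCPUS and not is_excluded(entry["type"])
    ]
    return sorted(eligible, key=lambda entry: entry["hourly_usd"])
```

The first entry in the returned list is the cheapest candidate, though you still need to confirm it is actually available in your region and availability zone.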

Although the enclave is free, you have to use a more expensive instance type to use one. The lowest cost instance type that works at the time of this writing is a c5a.xlarge.

So if there are roughly 730 hours in a month it would cost about $115 per month to run this instance. However, I don’t need to run it at all times. I only need to run it when I need the enclave to create my SSH keys or handle other sensitive operations.
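The arithmetic here is simple enough to sketch. The helper names are hypothetical, and I’m working backward from the roughly $115 figure rather than quoting a price, since the actual hourly rate depends on region and changes over time.

```python
HOURS_PER_MONTH = 730  # the approximation used in the text


def monthly_cost(hourly_usd: float, hours: float = HOURS_PER_MONTH) -> float:
    """Cost of running an instance for the given number of hours."""
    return hourly_usd * hours


def implied_hourly_rate(monthly_usd: float, hours: float = HOURS_PER_MONTH) -> float:
    """Hourly rate implied by a monthly figure."""
    return monthly_usd / hours


# Working backward from about $115 per month:
rate = implied_hourly_rate(115)            # a little under $0.16 per hour
ten_hours = monthly_cost(rate, hours=10)   # cost if the instance only runs 10 hours
```

At that implied rate, running the instance for ten hours a month instead of around the clock works out to well under two dollars, which is why starting it only when needed makes such a difference.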

Some other considerations:

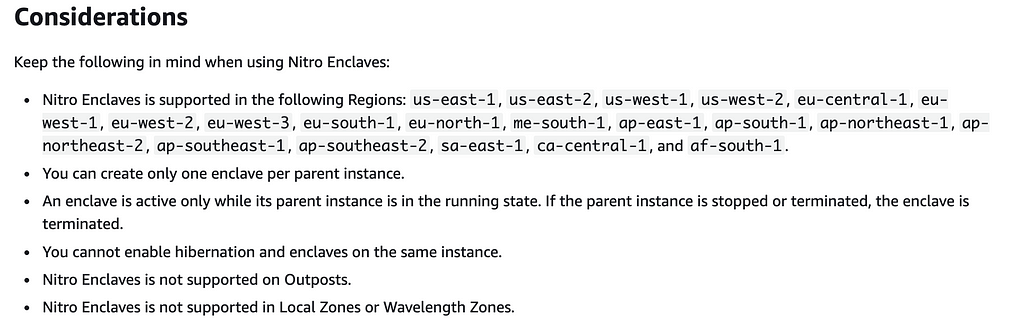

OK so we create an instance that allows us to create an enclave. The enclave is carved out from the host separately from the main EC2 VM and is essentially another VM that can only communicate with the instance from which it is created. AWS supports only one enclave per instance but may support more in the future.

How does an AWS Nitro Enclave work with KMS?

When you use an enclave with KMS you can create a KMS policy that only allows that specific enclave to use the encryption key to decrypt data. But how does KMS validate that it is allowing the correct enclave to use the encryption key?

Amazon KMS relies on the digital signature for the enclave’s attestation document to prove that the public key in the request came from a valid enclave.

How AWS Nitro Enclaves uses AWS KMS

When you build that image you can get the following to validate the integrity of the image:

- A checksum

- You can sign the image as well

KMS policy says only the image with matching checksum or signature can use that key.

A checksum will ensure that only a very specific image can decrypt data, however every time you rebuild the image it will have an updated checksum. That means you need to update your KMS policy every time the image changes. If you want segregation of duties and to know each time an image changes that’s a good thing.

However, sometimes an organization will use a signature instead and say, “Only images that are signed with our signature can use this decryption key.” The risk in this case is that an attacker obtains the signing key and can then use it to create an image that can decrypt the data, and that type of attack has happened in a number of high profile security incidents with large and well known software vendors.

Hackers somehow got their rootkit a Microsoft-issued digital signature

Consider the risk when choosing one option or the other in your key policies.
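To show roughly what the two options look like in a key policy, here is a sketch that builds one policy statement in Python. As I understand the KMS documentation, `kms:RecipientAttestation:ImageSha384` matches the image checksum and `kms:RecipientAttestation:PCR8` matches the signing certificate measurement, but verify those condition keys against the current docs before relying on them. The function name, the Sid, and the principal ARN are hypothetical.

```python
import json


def enclave_decrypt_statement(principal_arn, image_sha384=None, signing_cert_pcr8=None):
    """Build a KMS key policy statement restricted to an attested enclave.

    Pass exactly one of:
      image_sha384      - pins the policy to one specific image build
      signing_cert_pcr8 - allows any image signed with a given certificate
    """
    if (image_sha384 is None) == (signing_cert_pcr8 is None):
        raise ValueError("pass exactly one of image_sha384 or signing_cert_pcr8")

    if image_sha384 is not None:
        condition_key, value = "kms:RecipientAttestation:ImageSha384", image_sha384
    else:
        condition_key, value = "kms:RecipientAttestation:PCR8", signing_cert_pcr8

    return {
        "Sid": "AllowDecryptFromAttestedEnclave",  # hypothetical Sid
        "Effect": "Allow",
        "Principal": {"AWS": principal_arn},
        "Action": "kms:Decrypt",
        "Resource": "*",
        "Condition": {"StringEqualsIgnoreCase": {condition_key: value}},
    }
```

With the checksum form, the value in the policy has to be updated every time the image is rebuilt. With the signature form it stays the same across rebuilds, which is more convenient and is also the reason a stolen signing key is so damaging.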

Attestations

A Nitro Enclave can also provide an attestation to prove that it is the enclave you intend to use. Before sending sensitive data to the enclave you should validate the attestation document provided by the enclave.

Here is a helpful video that offers a deep dive on Nitro and how attestations work, though it seems to still be missing a few details.

Deep Dive Into AWS Nitro Enclaves | AWS Online Tech Talks

A Nitro Enclave generates a local public-private key pair. The private key never leaves the enclave. When the enclave is terminated the keys are gone.

I explained the concepts of asymmetric encryption using public and private keys in my book at the bottom of the post. The private/public key pair helps identify an individual enclave. Since the enclave is the only place where that private key exists, only that enclave will be able to decrypt documents encrypted with its public key.

An enclave requests an attestation document from the AWS Nitro attestation service. It sends the public key and a nonce with the request. The attestation service sends back an attestation document with details about the Nitro enclave. An enclave can provide that attestation document to someone who requests it who should then verify that the attestation document matches the expected enclave before providing any data to it. Only the enclave will be able to decrypt and provide the attestation document.
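Here is a minimal sketch of the "verify that the attestation document matches the expected enclave" step, written in Python. It assumes the document has already been decoded and its signature chain verified by some other code, which is the hard part and is not shown. The field names `pcrs` and `nonce` follow the attestation document format as I understand it, and the function name is hypothetical.

```python
import hmac


def matches_expected_enclave(doc: dict, expected_pcr0: bytes, sent_nonce: bytes) -> bool:
    """Check an already signature-verified attestation document.

    doc           - decoded attestation document (a dict)
    expected_pcr0 - image measurement we recorded when we built the enclave
    sent_nonce    - the random value we supplied with this particular request
    """
    pcr0 = doc.get("pcrs", {}).get(0) or b""
    nonce = doc.get("nonce") or b""
    # Constant-time comparisons; both checks must pass.
    image_ok = hmac.compare_digest(pcr0, expected_pcr0)
    fresh = hmac.compare_digest(nonce, sent_nonce)
    return image_ok and fresh
```

Checking the nonce ties the document to the request that was just made, and checking the measurement ties it to the image you expect. Whether that is sufficient for a given threat model is something to confirm against the AWS documentation rather than this sketch.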

Here’s the one question I have, however. What’s preventing that enclave from providing the attestation document from another enclave after requesting the attestation document? I didn’t see that in the video. What if someone requested an attestation document from an enclave, then passed it to another enclave, and somehow that enclave then passed out an incorrect attestation for a different enclave? The document would still be signed by the Nitro attestation service.

The person who received the attestation document could potentially take additional steps, such as verifying the checksum of the enclave and, if they have the public key for that enclave, decrypting the contents of the attestation with it. That document could only be encrypted with the proper private key. How does the person decrypting the document know that they have the correct public key?

This may be in the documentation somewhere but I can only spend so much time in a day on each post. This one is getting rather long and I’m not going to use an Enclave just yet for reasons mentioned at the bottom of the post.

A question that came to mind was whether it would be better to get the attestation from a separate service that tracks the attestation, similar to how TLS works. But perhaps somehow the PKI architecture solves this problem. I’ll have to dig into it more later.

For our purposes, I don’t think we need an attestation because we will not be sharing data with third parties or using third-party enclaves. I believe we will know which enclave we are using based on other factors, but that would be proven upon implementation and further testing.

Back to our tutorial — what else would we need to do to use an AWS Nitro Enclave?

- Build our code into a docker image

- Use the Nitro CLI to convert the image to a Nitro image

- Run the enclave using the image file

- If running in debug mode, you can view the read-only console output (which should not contain any sensitive data in production!)
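The steps above can be sketched as a small Python wrapper around the command line tools. The `docker` and `nitro-cli` subcommands and flags are the ones I recall from the Hello enclave tutorial, so double check them against the current documentation. The helper names are hypothetical.

```python
import subprocess


def docker_build_cmd(context_dir: str, tag: str) -> list:
    """Step 1: build our code into a Docker image."""
    return ["docker", "build", context_dir, "-t", tag]


def build_enclave_cmd(docker_uri: str, eif_path: str) -> list:
    """Step 2: convert the Docker image into an enclave image file."""
    return ["nitro-cli", "build-enclave",
            "--docker-uri", docker_uri, "--output-file", eif_path]


def run_enclave_cmd(eif_path: str, cpu_count: int, memory_mib: int, debug: bool = False) -> list:
    """Step 3: run the enclave from the image file."""
    cmd = ["nitro-cli", "run-enclave", "--eif-path", eif_path,
           "--cpu-count", str(cpu_count), "--memory", str(memory_mib)]
    if debug:
        cmd.append("--debug-mode")  # never use with sensitive data
    return cmd


def console_cmd(enclave_id: str) -> list:
    """Step 4: view read-only console output of an enclave in debug mode."""
    return ["nitro-cli", "console", "--enclave-id", enclave_id]


def run(cmd: list) -> str:
    """Execute a command and return its standard output."""
    return subprocess.run(cmd, check=True, capture_output=True, text=True).stdout
```

On an enclave-enabled instance you would call `run(...)` on each command in order. Keeping the command construction separate from execution makes it easy to review exactly what will be run before it runs.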

Do we need an enclave?

An enclave would certainly be more secure than the method I used in the last post to create an SSH key, presuming I can get the encrypted data into SSM and/or Secrets manager from the enclave. I’d need to look at how that works because you’d get back encrypted data from the enclave, and then you’d transport that to KMS or SSM. The reason you likely can’t use the approach we did in prior posts is that Secrets Manager and SSM would require network access.

This adds complexity and cost to our design, and right at this moment I want to get through some other portions of the architecture first. I feel that the steps we are taking are reasonably secure for a POC but if I was accessing highly sensitive data, I might opt to implement the enclave before proceeding. We may also be able to leverage other controls to prevent access to the host that generates the SSH key, which is created and immediately shredded. In fact, I’m going to change the code to never store the key to disk, for one thing.
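A minimal sketch of what "never store the key to disk" could look like in Python is below. It assumes the key is created with the EC2 CreateKeyPair API and stored in Secrets Manager, and that the two clients passed in are boto3 clients (or objects with the same `create_key_pair` and `create_secret` methods). The function name and the secret naming scheme are hypothetical.

```python
def create_and_store_ssh_key(ec2_client, secrets_client, user_name: str, kms_key_id: str) -> str:
    """Create an EC2 key pair and put the private key straight into a secret.

    The private key only ever exists in this process's memory; it is never
    written to a file, so there is nothing to shred afterwards.
    """
    response = ec2_client.create_key_pair(KeyName=user_name)

    secrets_client.create_secret(
        Name=f"ssh-key-{user_name}",           # hypothetical naming scheme
        SecretString=response["KeyMaterial"],  # the private key, passed in memory
        KmsKeyId=kms_key_id,                   # key that IAM admins cannot use to decrypt
    )
    return response["KeyPairId"]
```

This removes the disk exposure but not the memory exposure: the private key is still in the memory of whatever host runs this code, which is the gap an enclave is meant to close.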

Alternatively, what if we want to use AWS Batch to run our code? I don’t see any way to use AWS Batch with a Nitro enclave and confirmed with someone at AWS via Twitter that AWS Batch does not leverage enclaves. How data is isolated and protected depends on your selected compute environment — a topic we will explore more later.

What if we run our automation scripts in a locked down batch process that no one can alter or access and therefore they cannot access the key? Perhaps that is another way to create credentials in a manner that provides our desired non-repudiation.

Another option would be to create a process for users to create their own SSH key. We could create a policy that allows a user to create an SSH key themselves but that only matches their own user name. That way only the specified user would ever have their own SSH key. Then they could use an automated process to deploy an approved EC2 instance using that key.
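One way such a policy might look is sketched below as a Python dict. It relies on the `${aws:username}` policy variable and on my understanding that `ec2:CreateKeyPair` supports resource-level permissions on the key pair name; I have not tested this policy, so treat it as a starting point rather than a working control. The function name and Sid are hypothetical.

```python
import json


def self_service_key_pair_policy() -> dict:
    """IAM policy letting a user create only a key pair named after themselves."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "CreateOwnKeyPairOnly",  # hypothetical Sid
                "Effect": "Allow",
                "Action": "ec2:CreateKeyPair",
                # ${aws:username} is resolved by IAM to the caller's user name
                "Resource": "arn:aws:ec2:*:*:key-pair/${aws:username}",
            }
        ],
    }


policy_json = json.dumps(self_service_key_pair_policy(), indent=2)
```

Note that `${aws:username}` only resolves for IAM users, so this approach would need a different variable or condition for federated or assumed-role identities.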

Although I would really like to implement a Nitro Enclave because it would be super interesting, I’m going to finish some other things first. Before we can even deploy the enclave we need an EC2 instance and that’s why we are creating this SSH key in the first place — so we can use it with an EC2 instance and log into it. Lots of catch-22’s in security. (22, SSH, pun not intended.)

Stay tuned for more and possibly I’ll revisit this topic in the future.

Teri Radichel

If you liked this story please clap and follow:

Medium: Teri Radichel or Email List: Teri Radichel

Twitter: @teriradichel or @2ndSightLab

Requests services via LinkedIn: Teri Radichel or IANS Research

© 2nd Sight Lab 2022

All the posts in this series:

Automating Cybersecurity Metrics (ACM)

____________________________________________

Author:

Cybersecurity for Executives in the Age of Cloud on Amazon

Need Cloud Security Training? 2nd Sight Lab Cloud Security Training

Is your cloud secure? Hire 2nd Sight Lab for a penetration test or security assessment.

Have a Cybersecurity or Cloud Security Question? Ask Teri Radichel by scheduling a call with IANS Research.

Cybersecurity & Cloud Security Resources by Teri Radichel: Cybersecurity and Cloud security classes, articles, white papers, presentations, and podcasts

This post was originally published in Cloud Security on Medium.
